# Build a web scraper in Node: how-to

Since we already know how to parse the HTML, the next step is to build a nice public interface we can export into a module.
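One possible shape for such a public interface is sketched below. It is only an illustration, not the author's module: the file name `scraper.js`, the `createScraper` function, and its `load` and `parse` options are all hypothetical names. The loading and parsing steps are passed in as functions, so the exported interface stays small and the example runs without network access.

```javascript
// scraper.js (hypothetical file name)

// Build a scraper from two injected steps:
// `load` fetches the HTML for a URL, `parse` turns HTML into data.
function createScraper({ load, parse }) {
  if (typeof load !== "function" || typeof parse !== "function") {
    throw new TypeError("createScraper expects `load` and `parse` functions");
  }
  // The public interface: give it a URL, get back a promise of parsed data.
  return async function scrape(url) {
    const html = await load(url);
    return parse(html);
  };
}

module.exports = { createScraper };

// Usage with stand-in steps (no real request is made here).
const scrapeTitle = createScraper({
  load: async (url) => `<h2 class="title">Hello from ${url}</h2>`,
  parse: (html) => {
    const match = html.match(/<h2 class="title">([^<]*)<\/h2>/);
    return match ? match[1] : null;
  },
});

scrapeTitle("example.test").then((title) => console.log(title));
```

In a real scraper, `load` would be an HTTP request helper and `parse` would use an HTML parser, but the exported function would keep the same shape.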

Once you have all the dependencies, you're going to need a webpage to scrape. Once we have a piece of HTML, we need to parse it. cheerio provides a jQuery-like interface to interact with a piece of HTML you already have. Parse the HTML with `let $ = cheerio.load('<h2 class="title">Hello world</h2>')`, then take the `h2.title` element and show its text with `console.log($("h2.title").text())`.

Because I like to modularize all the things, I created cheerio-req, which is basically tinyreq combined with cheerio (the previous two steps put together): `const cheerioReq = require("cheerio-req")`.

# Build a web scraper in Node: install

The modules I have used are request, cheerio, and promise. If you don't have npm installed yet, follow the official Node.js installation instructions to install node and npm first. Then install the modules like this: `npm install --save request cheerio promise`. Tinyreq is actually a friendlier wrapper around the native built-in `http.request`.

Node.js is a runtime environment that runs JavaScript code outside the browser, for example in the terminal, and the server will be created with it. Before adding the necessary files to your project, make sure that Node.js is installed. Then create a project folder, name it Custom Web Scraper or whatever name you'd prefer, and open it in VS Code; it should be empty at this point.

To load the web page, we need to use a library that makes HTTP(S) requests. There are a lot of modules that do that. Like always, I recommend choosing simple, small modules; I wrote a tiny package that does it: tinyreq. It's designed to be really simple to use and stays quite minimalist. Using this module, you can easily get the HTML rendered by the server from a web page: load it with `const request = require("tinyreq")`, make the request, and in its callback call `console.log(err || body)` to print out the HTML.

That's great: I can do whatever I want with this data. I can regex it to get the actual names, or just use the HTML as it is.
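To make the "load the web page" step concrete without installing anything, here is a minimal sketch that uses only Node's built-in `http` and `https` modules. It is not tinyreq's implementation; `fetchHtml` and `demo` are hypothetical names, and the demo talks to a throwaway local server so it can run offline.

```javascript
const http = require("http");
const https = require("https");

// Hypothetical helper: request a URL and resolve with the response body as a string.
function fetchHtml(url) {
  return new Promise((resolve, reject) => {
    const client = url.startsWith("https:") ? https : http;
    client
      .get(url, (res) => {
        if (res.statusCode < 200 || res.statusCode >= 300) {
          res.resume(); // discard the body so the socket is released
          reject(new Error(`Request failed with status ${res.statusCode}`));
          return;
        }
        res.setEncoding("utf8");
        let body = "";
        res.on("data", (chunk) => { body += chunk; });
        res.on("end", () => resolve(body));
      })
      .on("error", reject);
  });
}

// Demo: start a local server on a random free port, fetch its page, then shut it down.
function demo() {
  return new Promise((resolve, reject) => {
    const server = http.createServer((req, res) => {
      res.writeHead(200, { "Content-Type": "text/html", Connection: "close" });
      res.end('<h2 class="title">Hello world</h2>');
    });
    server.on("error", reject);
    server.listen(0, "127.0.0.1", () => {
      const { port } = server.address();
      fetchHtml(`http://127.0.0.1:${port}/`)
        .then(resolve, reject)
        .finally(() => server.close());
    });
  });
}

demo().then(
  (body) => console.log(body), // Print out the HTML
  (err) => console.error(err)
);
```

Pointing `fetchHtml` at a real page works the same way. Redirects, timeouts, and non-UTF-8 encodings are deliberately left out of this sketch.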

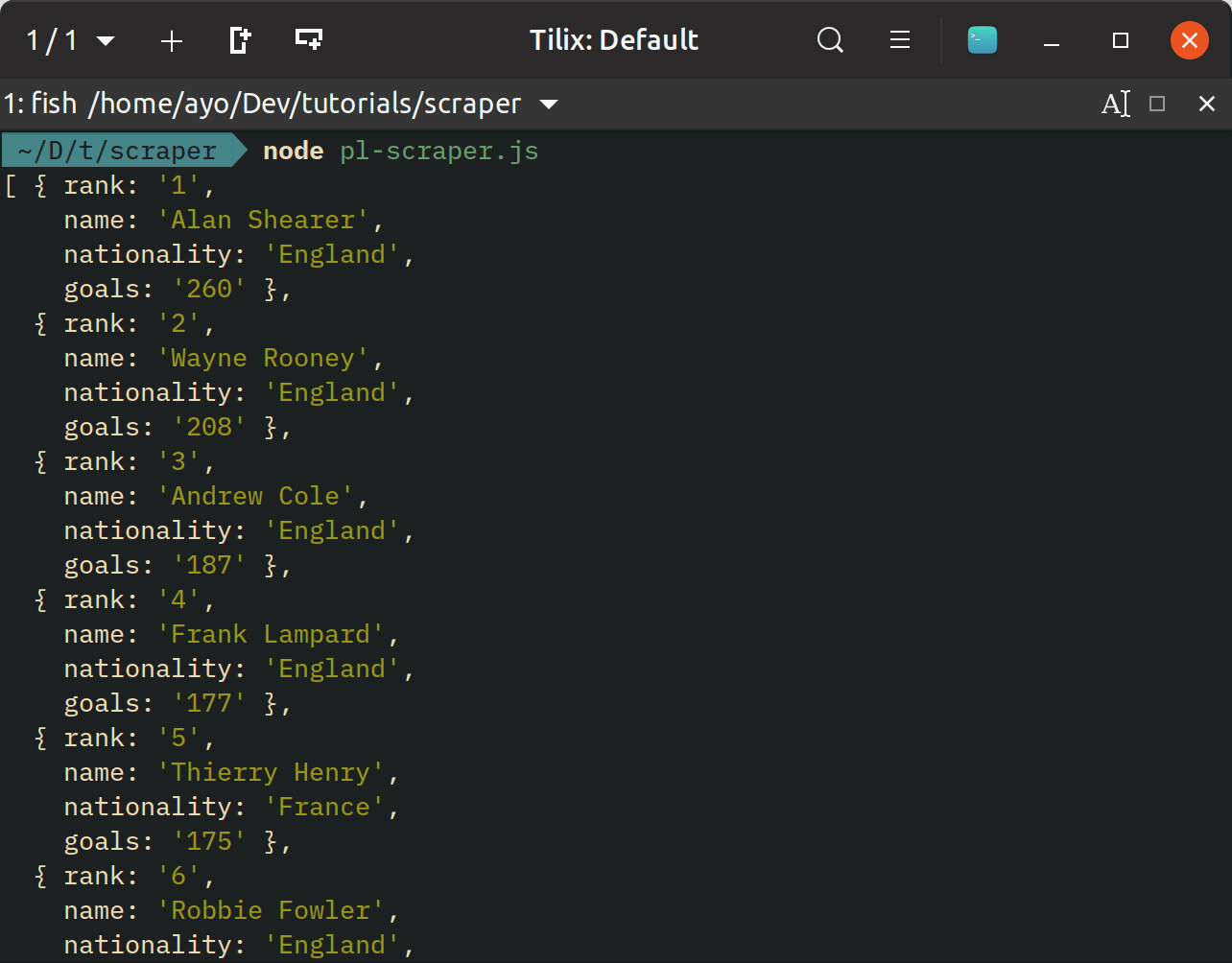

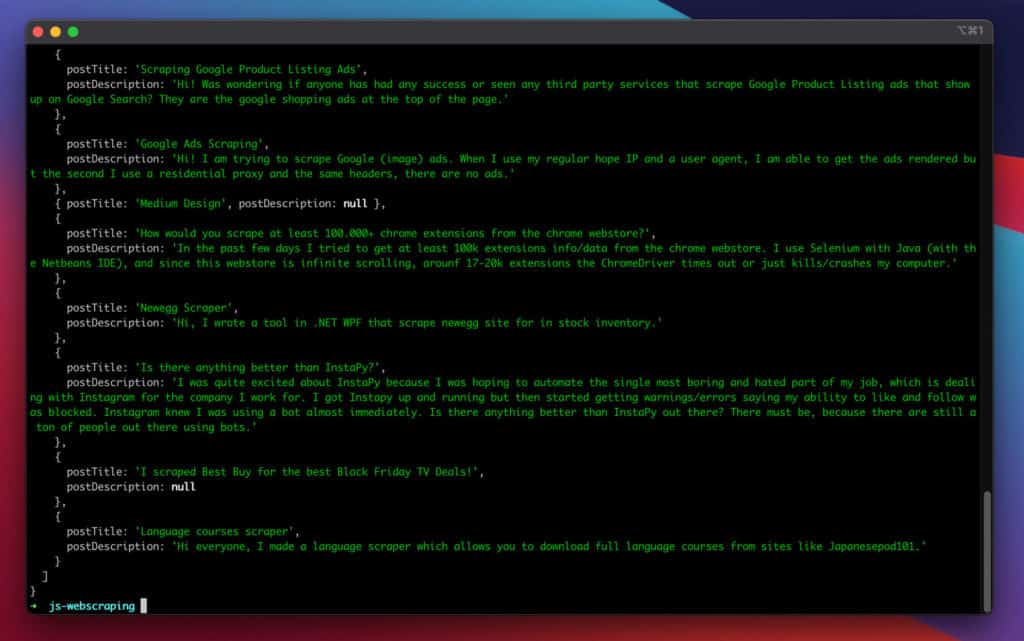

In Node.js, all three of these steps are quite easy because the functionality is already available in different modules, written by different developers. Because I often scrape random websites, I created yet another scraper: scrape-it, a Node.js scraper for humans.

Now if I `console.log` `lipsticksNameDiv` and run `node index.js` in the terminal, I should get a list of all the divs that have the class `shade-picker-floatcolors`.
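As a rough illustration of collecting those divs, the sketch below uses a plain regular expression instead of a parsing library. Everything except the class name and the `lipsticksNameDiv` variable is an assumption: the sample markup, the `title` attributes, and the `findDivsByClass` helper are invented for the example. A regex like this only inspects opening tags and is fragile on real pages, so treat it as a quick experiment rather than a robust approach.

```javascript
// Invented sample markup; the real page structure may differ.
const html = `
  <div class="shade-picker-floatcolors" title="Shade A"></div>
  <div class="shade-picker-floatcolors active" title="Shade B"></div>
  <div class="other">ignored</div>
`;

// Collect the opening tag of every <div> whose class list contains `className`.
function findDivsByClass(source, className) {
  const openTag = /<div\b[^>]*\bclass="([^"]*)"[^>]*>/g;
  const matches = [];
  for (const m of source.matchAll(openTag)) {
    const classes = m[1].split(/\s+/);
    if (classes.includes(className)) matches.push(m[0]);
  }
  return matches;
}

const lipsticksNameDiv = findDivsByClass(html, "shade-picker-floatcolors");
console.log(lipsticksNameDiv);

// Pull a name out of each matched tag (assumes the name lives in a title attribute).
const names = lipsticksNameDiv.map(
  (tag) => (tag.match(/\btitle="([^"]*)"/) || [])[1]
);
console.log(names);
```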
